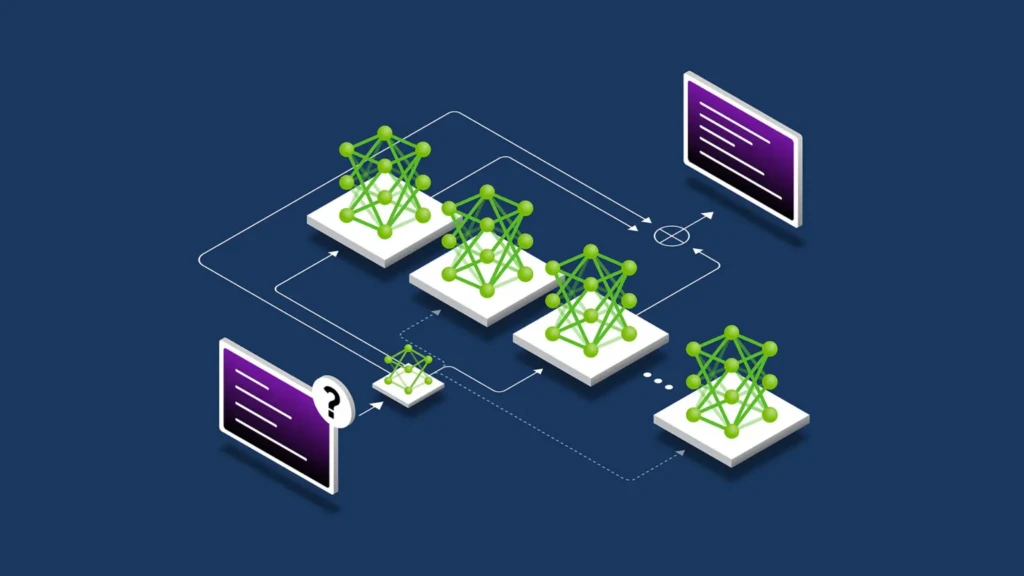

A Mixture of Experts (MoE) model is a machine learning architecture that divides a complex problem among several specialized sub-networks, each called an “expert”, and uses a learned gating network to decide which experts process each input. Because only a small subset of experts (often one or two) is activated per input, the model can hold many more parameters than it uses for any single input. The result is a system whose total capacity can grow without a proportional increase in computation per input.

The Core Components of a Mixture of Experts Model

1. Experts: Specialized Sub-Networks

In an MoE model, the “experts” are individual neural networks trained to specialize in specific aspects of the input data. Each expert learns to handle a subset of the problem, allowing the model to tackle complex tasks by leveraging specialized knowledge.
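The article does not fix an architecture for the experts. In Transformer-based MoE layers they are commonly small feed-forward networks, so the sketch below assumes that: each expert is a two-layer network (Linear, ReLU, Linear) that maps a `d_model`-sized vector back to `d_model`, so outputs from different experts can later be combined. The names `make_expert`, `d_model` and `d_hidden` are hypothetical, and the random initialization is for illustration only.

```python
import random

def make_expert(d_model, d_hidden, seed):
    """Build a hypothetical expert: a two-layer feed-forward network (Linear -> ReLU -> Linear)."""
    rng = random.Random(seed)
    # Illustrative random weights; a real expert would be trained.
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
    w2 = [[rng.gauss(0.0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]

    def expert(x):
        # Hidden layer with ReLU activation.
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1]
        # Project back to d_model so every expert's output has the same shape.
        return [sum(w * hi for w, hi in zip(row, h)) for row in w2]

    return expert

# Four independent experts with different weights.
experts = [make_expert(d_model=4, d_hidden=8, seed=i) for i in range(4)]
x = [1.0, -0.5, 0.25, 2.0]
print(len(experts), len(experts[0](x)))  # → 4 4
```

Giving every expert the same input and output shape is what lets the gating network treat them as interchangeable candidates.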

2. Gating Network: Dynamic Expert Selection

The gating network (also called the router) determines which expert(s) should process a given input. It scores every expert for that input, typically with a learned linear layer followed by a softmax, and in sparse MoE models it keeps only the top-k scoring experts. Only the selected experts are executed, which is where the efficiency gain comes from.
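A minimal sketch of top-k gating, assuming a linear router (one weight row per expert) and a softmax taken over only the k highest logits. Implementations differ on this last point: some apply the softmax over all experts before selecting the top k. The function name `top_k_gating` and the toy weights are hypothetical.

```python
import math

def top_k_gating(x, w_gate, k):
    """Score every expert, keep the k highest scores, and softmax over those k only."""
    # One logit per expert: dot product of the input with that expert's gating row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in w_gate]
    # Indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: -logits[i])[:k]
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    # Map expert index -> gate weight; unselected experts get no entry.
    return {i: e / total for i, e in exps.items()}

# Toy router for 4 experts and 2-dimensional inputs.
w_gate = [[0.5, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.2, 0.2]]
gates = top_k_gating([1.0, 2.0], w_gate, k=2)
print(gates)  # expert 1 gets about 0.80 of the weight, expert 3 about 0.20
```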

3. Output Combination: Integrating Expert Contributions

After the gating network selects the experts, their outputs are combined into the final result. The most common method is a weighted sum, in which each selected expert's output is scaled by the gate weight assigned to it. This step merges the contributions of the specialized experts into a single coherent output.
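Putting routing and combination together, the sketch below computes y = Σᵢ gᵢ · Eᵢ(x) over the top-k experts only, where gᵢ is the gate weight and Eᵢ the expert. It assumes the same top-k softmax router as a typical sparse MoE layer; the toy experts, which just scale their input by a constant, and the name `moe_forward` are hypothetical.

```python
import math

def moe_forward(x, experts, w_gate, k):
    """Route x to its top-k experts and return the gate-weighted sum of their outputs."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in w_gate]
    top = sorted(range(len(logits)), key=lambda i: -logits[i])[:k]
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    y = [0.0] * len(x)
    for i, e in exps.items():
        gate = e / total
        # Only the selected experts are evaluated; the others are never called.
        out = experts[i](x)
        y = [yj + gate * oj for yj, oj in zip(y, out)]
    return y

# Hypothetical toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
w_gate = [[0.5, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.2, 0.2]]
y = moe_forward([1.0, 2.0], experts, w_gate, k=2)
print(y)  # roughly [2.396, 4.791]
```

Here experts 1 and 3 are selected with weights of about 0.80 and 0.20, so the output is about 0.80 × 2x + 0.20 × 4x.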

Advantages of Mixture of Experts Models

1. Enhanced Efficiency

By activating only a subset of experts for each input, MoE models use far less computation per input than a dense model with the same total number of parameters, which engages all of its parameters for every input. This can translate into faster processing and lower energy use per input, although all experts must still be held in memory.
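A back-of-the-envelope comparison, assuming each expert is a two-matrix feed-forward block (biases ignored) and counting only multiply-adds in that block plus the router. The dense baseline is a single feed-forward block with the same total parameter count as all experts combined. The dimensions (1024, 4096, 8 experts, top-2) are illustrative choices, not values from the article.

```python
def ffn_flops_per_token(d_model, d_hidden):
    """Multiply-adds for one Linear(d_model->d_hidden) + Linear(d_hidden->d_model) block."""
    return 2 * d_model * d_hidden

def compare(d_model, d_hidden, num_experts, k):
    # Dense baseline: one FFN whose hidden size equals all experts' hidden sizes combined,
    # so its parameter count matches the whole MoE layer.
    dense = ffn_flops_per_token(d_model, num_experts * d_hidden)
    # MoE: only k experts run, plus a linear router with one output per expert.
    moe = k * ffn_flops_per_token(d_model, d_hidden) + d_model * num_experts
    return dense, moe

dense, moe = compare(d_model=1024, d_hidden=4096, num_experts=8, k=2)
print(dense, moe, round(moe / dense, 3))  # → 67108864 16785408 0.25
```

With top-2 routing over 8 experts, the MoE layer does roughly k/N = 1/4 of the dense layer's feed-forward work per token; attention and other shared layers are not counted here.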

2. Scalability

MoE models can scale by adding more experts to handle greater complexity or data volume. Because the number of active experts per input stays fixed, total parameters grow with each added expert while computation per input stays roughly constant. Memory requirements, and in distributed setups communication between devices, still grow with the number of experts.
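To make the scaling argument concrete, this sketch tabulates total versus active expert parameters as the number of experts grows with top-2 routing held fixed. It assumes the same two-matrix expert shape as above (biases and router ignored); the helper name `scaling_table` and the dimensions are hypothetical.

```python
def scaling_table(d_model, d_hidden, k, expert_counts):
    """Return (num_experts, total expert params, active expert params per token)."""
    per_expert = 2 * d_model * d_hidden  # two weight matrices per expert
    return [(n, n * per_expert, k * per_expert) for n in expert_counts]

rows = scaling_table(d_model=1024, d_hidden=4096, k=2, expert_counts=(8, 16, 32, 64))
for n, total, active in rows:
    print(f"{n:>3} experts: total={total:,}  active per token={active:,}")
```

Total parameters double with each doubling of the expert count, while the active count stays at two experts' worth. The router's cost does grow linearly with the number of experts, but it is small relative to the experts themselves.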

3. Specialization

The division of labor among experts lets each one specialize in a particular part of the problem, which can improve accuracy on tasks that mix very different kinds of input. This specialization is particularly beneficial in complex tasks requiring diverse expertise.

Applications of Mixture of Experts Models

1. Natural Language Processing (NLP)

In NLP tasks, MoE models can handle varied linguistic phenomena, with different experts coming to specialize in different languages, dialects, or linguistic structures. In most large MoE language models this specialization emerges from learned routing rather than being assigned by hand. This capability can benefit translation, sentiment analysis, and language generation.

2. Computer Vision

For image recognition and processing, MoE models can assign experts to specialize in detecting specific objects, textures, or patterns, leading to improved accuracy in image classification and object detection tasks.

3. Speech Recognition

In speech recognition systems, MoE models can allocate experts to handle different accents, speech patterns, or noise conditions, enhancing the system’s ability to accurately transcribe spoken language.

Challenges and Considerations

1. Expert Coordination

Ensuring that experts collaborate effectively without redundancy or conflict is a significant challenge. Poor coordination can lead to inconsistent outputs and reduced model performance.

2. Load Balancing

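Distributing inputs evenly among experts is crucial. If the router sends most inputs to a few experts, those experts become bottlenecks (and, in implementations with a fixed per-expert capacity, overflow inputs may be dropped), while the remaining experts receive too little training signal. A common remedy is an auxiliary loss that penalizes uneven routing.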
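One widely used form of that auxiliary loss, in the style described for Switch Transformers, is α · N · Σᵢ fᵢ · Pᵢ, where N is the number of experts, fᵢ is the fraction of tokens whose top-1 expert is i, and Pᵢ is the mean router probability for expert i. It reaches its minimum of α when routing is uniform. The sketch assumes top-1 routing; the function names and the coefficient `alpha=0.01` are illustrative.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]

def load_balancing_loss(router_logits, alpha=0.01):
    """alpha * N * sum_i f_i * P_i over a batch of per-token router logits."""
    n_tokens, n_experts = len(router_logits), len(router_logits[0])
    probs = [softmax(row) for row in router_logits]
    # f_i: fraction of tokens whose highest-probability (top-1) expert is i.
    counts = [0] * n_experts
    for p in probs:
        counts[max(range(n_experts), key=p.__getitem__)] += 1
    f = [c / n_tokens for c in counts]
    # P_i: average probability the router assigns to expert i.
    P = [sum(p[i] for p in probs) / n_tokens for i in range(n_experts)]
    return alpha * n_experts * sum(fi * pi for fi, pi in zip(f, P))

# Balanced: token t prefers expert t. Collapsed: every token prefers expert 0.
balanced = load_balancing_loss([[5.0 if i == t else 0.0 for i in range(4)] for t in range(4)])
collapsed = load_balancing_loss([[5.0, 0.0, 0.0, 0.0] for _ in range(4)])
print(round(balanced, 4), round(collapsed, 4))
```

The collapsed batch scores roughly four times higher than the balanced one. In training, the gradient flows through Pᵢ (the counts fᵢ are not differentiable), nudging the router toward spreading tokens out.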

3. Training Complexity

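Training MoE models involves a harder optimization problem: the gating network must learn to assign inputs to suitable experts while the experts learn their specialized tasks, and the discrete top-k selection means the router receives gradient signal only through the experts it actually selected. This can make training slower or less stable, and practitioners rely on techniques such as noisy gating and auxiliary balancing losses to help it converge.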
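Noisy top-k gating, introduced by Shazeer et al. (2017), is one such technique: before selecting the top k, it adds Gaussian noise whose per-expert scale is itself learned, Hᵢ = (x·W_g)ᵢ + N(0, 1) · softplus((x·W_noise)ᵢ). Experts near the selection boundary are then occasionally chosen, which helps spread load and gives more experts a training signal. This is a minimal sketch; disabling noise at inference (`train=False`) is an assumption of this example, and the names are hypothetical.

```python
import math
import random

def softplus(v):
    # Numerically stable log(1 + e^v).
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))

def noisy_top_k_gating(x, w_gate, w_noise, k, rng, train=True):
    """H_i = (x.W_g)_i + N(0,1) * softplus((x.W_noise)_i), then softmax over the top k."""
    clean = [sum(w * xi for w, xi in zip(row, x)) for row in w_gate]
    if train:
        # Learned, input-dependent noise scale for each expert.
        noise_std = [softplus(sum(w * xi for w, xi in zip(row, x))) for row in w_noise]
        h = [c + rng.gauss(0.0, 1.0) * s for c, s in zip(clean, noise_std)]
    else:
        h = clean  # assumption: no noise at inference time
    top = sorted(range(len(h)), key=lambda i: -h[i])[:k]
    m = max(h[i] for i in top)
    exps = {i: math.exp(h[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

w_gate = [[0.5, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.2, 0.2]]
w_noise = [[0.1, 0.1]] * 4  # illustrative noise weights
rng = random.Random(0)
train_gates = noisy_top_k_gating([1.0, 2.0], w_gate, w_noise, 2, rng, train=True)
eval_gates = noisy_top_k_gating([1.0, 2.0], w_gate, w_noise, 2, rng, train=False)
print(train_gates, eval_gates)
```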

Future Directions

The development of Mixture of Experts models is ongoing, with researchers exploring various enhancements to improve their performance and applicability. Future advancements may include more sophisticated gating mechanisms, better load balancing strategies, and integration with other machine learning paradigms to create more robust and versatile models.

FAQs

1. How does a Mixture of Experts model improve computational efficiency?

A Mixture of Experts model improves computational efficiency by activating only a subset of specialized experts for each input, reducing the computation per input compared to dense models that engage all of their parameters for every input.

2. What role does the gating network play in a Mixture of Experts model?

The gating network in a Mixture of Experts model evaluates the input and assigns weights to the experts, determining which ones process that input and how much each contributes to the final output.

3. Can Mixture of Experts models be applied to all machine learning tasks?

While Mixture of Experts models are versatile and can be applied to various machine learning tasks, their effectiveness depends on the complexity of the task and the ability to divide it into specialized sub-tasks.

4. What are the main challenges in implementing Mixture of Experts models?

The main challenges in implementing Mixture of Experts models include ensuring effective expert coordination, balancing the load among experts, and managing the complexity of training the model.

5. How does a Mixture of Experts model handle large-scale data?

A Mixture of Experts model handles large-scale data by scaling its capacity through the addition of more experts, activating only a few at a time, which allows it to process large volumes of data efficiently without a proportional increase in computational demands.

Conclusion

The Mixture of Experts model represents a significant advancement in machine learning, offering a scalable and efficient approach to handling complex tasks. By leveraging specialized sub-networks and dynamic expert selection, MoE models can achieve high performance while managing computational resources effectively. As research continues, further refinements are expected to enhance their capabilities and broaden their applicability across various domains.